Tribhuvanesh Orekondy

I am a research scientist at Qualcomm AI Research in Switzerland. I currently work on large language models (efficiency, reasoning, verification, multi-turn and long-horizon settings, post-training). I previously worked on data-driven differentiable simulations, neural rendering, and generative models.

I completed my PhD in Computer Vision and Machine Learning at the Max Planck Institute for Informatics in 2020, advised by Mario Fritz and Bernt Schiele. Before that, I received a Master's degree in Computer Science from ETH Zürich.

Email · Google Scholar · GitHub · LinkedIn · MPI

News

- Demo at NeurIPS '25: Efficient LLM Reasoning on the Edge

- Paper at ICLR '25: Differentiable and Learnable Wireless Simulation with Geometric Transformers

- Organizing the NeurIPS '24 workshop on Differentiable Simulations

- New tech report: Simulating, Fast and Slow: Learning Policies for Black-Box Optimization

- Two workshop papers at NeurIPS '23: Active Learning and Simulator Switching

- Paper at GLOBECOM '23: Transformer-Based Neural Surrogate for Link-Level Path Loss Prediction from Variable-Sized Maps

- Paper at ICLR '23: WiNeRT: Towards Neural Ray Tracing for Wireless Channel Modelling and Differentiable Simulations

Research

I currently work on LLMs (notably efficiency, sampling, verification, multi-turn and long-horizon settings, and agents). Previously, I worked on data-driven differentiable simulations, neural rendering, and generative modelling. During my PhD, I focused on trustworthy and reliable ML (adversarial ML, privacy-preserving techniques).

Amin Rakhsha, Pietro Mazzaglia, Thomas Hehn, Fabio Valerio Massoli, Arash Behboodi, Tribhuvanesh Orekondy

arXiv, 2026

paper · bibtex

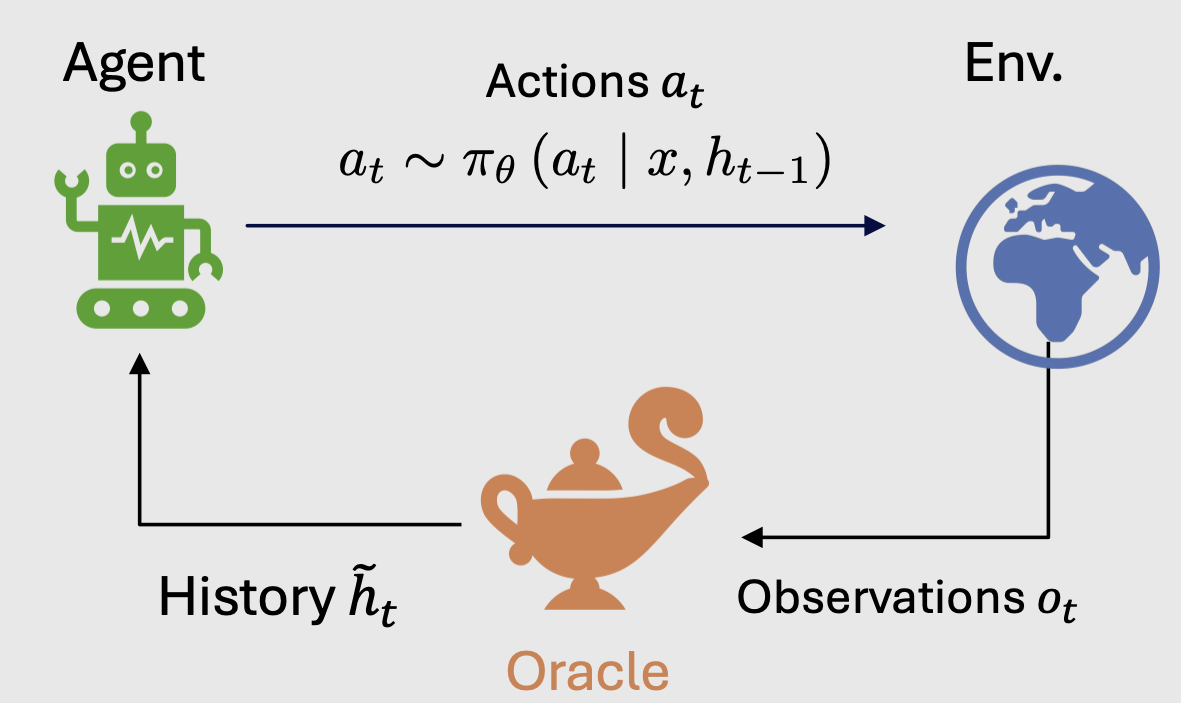

An oracle-based counterfactual framework and controlled multi-turn environments to isolate which skills (e.g., planning or state tracking) truly matter for long-horizon LLM-based agent performance.

Yelysei Bondarenko*, Thomas Hehn*, Rob Hesselink*, Romain Lepert*, Fabio Valerio Massoli*, Evgeny Mironov*, Leyla Mirvakhabova*, Tribhuvanesh Orekondy*, Spyridon Stasis*, Andrey Kuzmin, Anna Kuzina, Markus Nagel, Corrado Rainone, Ork de Rooij, Paul N Whatmough, Arash Behboodi, Babak Ehteshami Bejnordi

NeurIPS Expo Demo, 2025

arXiv, 2026

paper (coming soon) · Expo demo

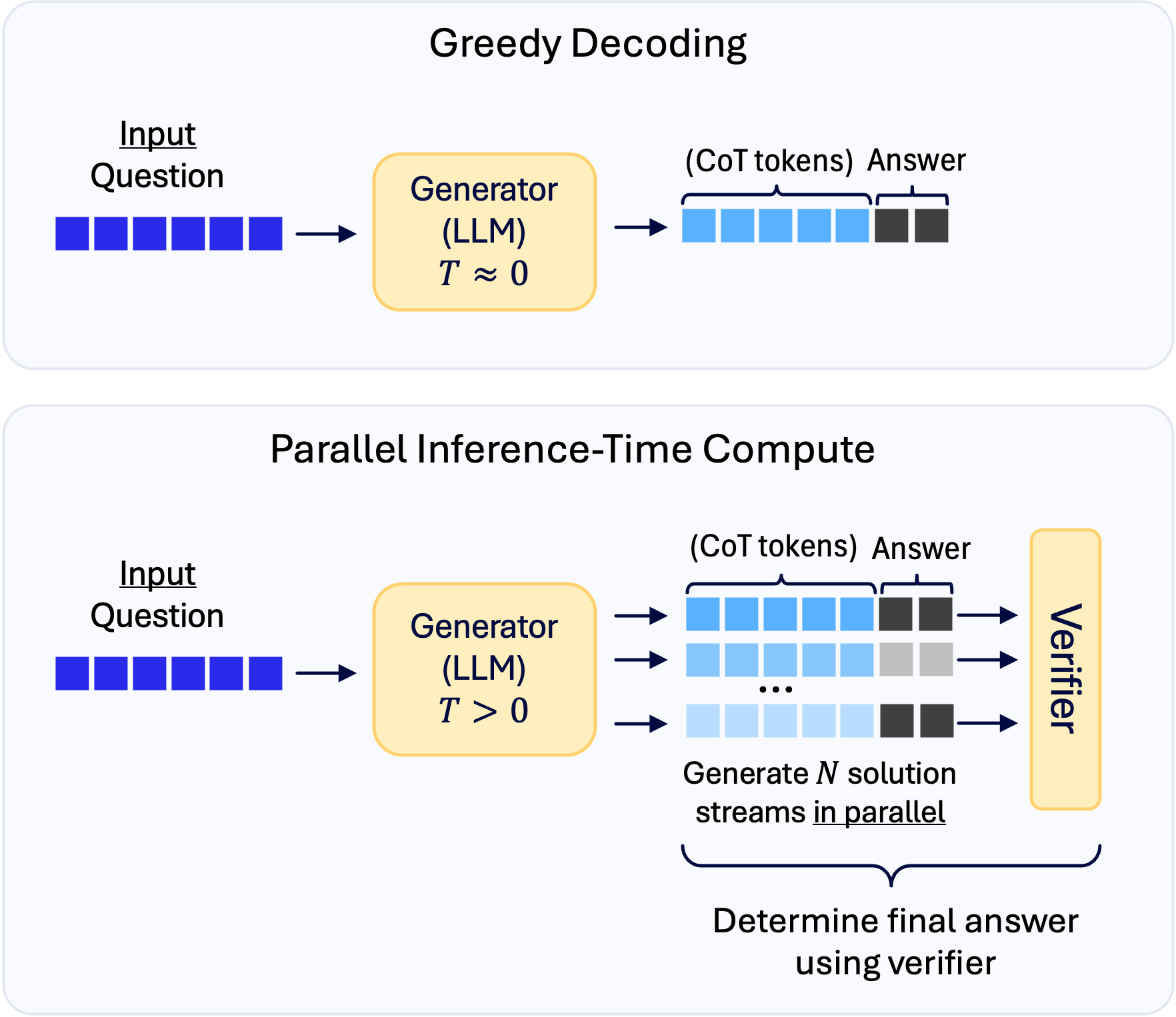

We show how to make LLM reasoning practical on edge devices by combining lightweight LoRA-based reasoning adapters, budget-aware training, dynamic adapter switching, and optimized parallel decoding to achieve efficient, accurate reasoning under tight memory, compute, and latency constraints.

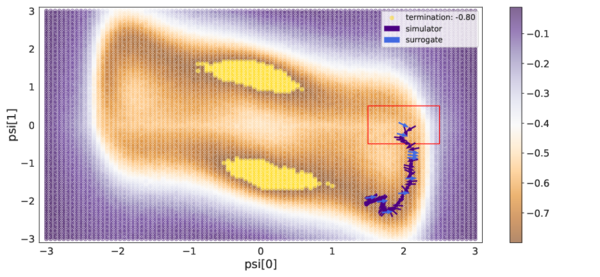

Differentiable and Learnable Wireless Simulation with Geometric Transformers

Thomas Hehn, Markus Peschl, Tribhuvanesh Orekondy, Arash Behboodi, Johann Brehmer

ICLR, 2025

paper · bibtex

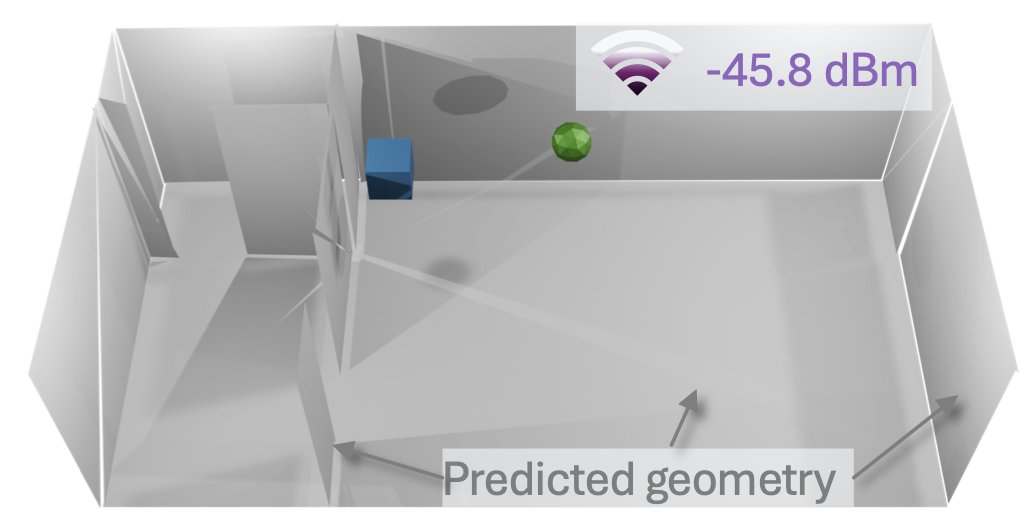

A surrogate simulator based on geometric deep learning transformers that models the joint distribution of a 3D environment and its influence on EM wave propagation.

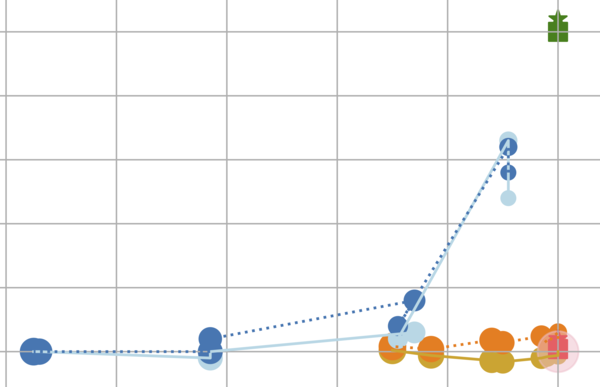

Simulating, Fast and Slow: Learning Policies for Black-Box Optimization

Fabio Valerio Massoli, Tim Bakker, Thomas Hehn, Tribhuvanesh Orekondy, Arash Behboodi

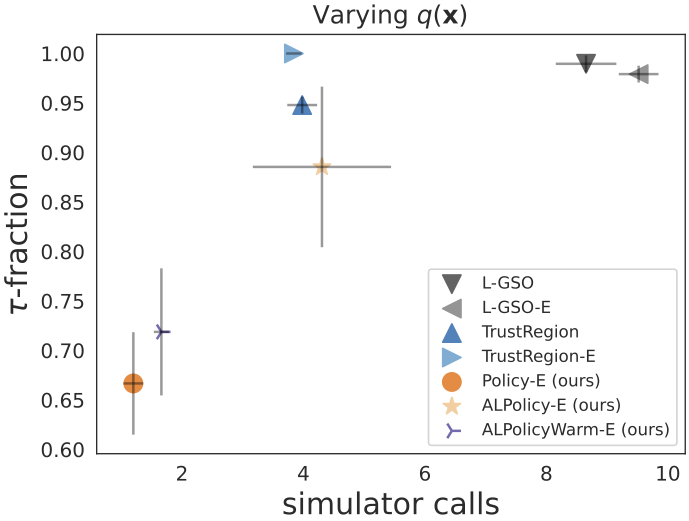

arXiv, 2024

paper · bibtex

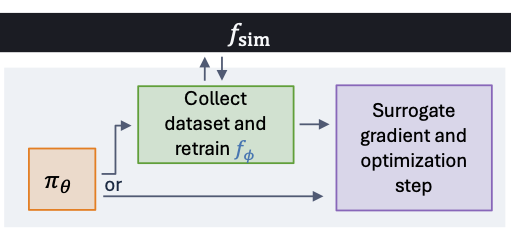

Solving black-box optimization problems on stochastic simulators using neural surrogates and reinforcement learning.

Tim Bakker, Thomas Hehn, Tribhuvanesh Orekondy, Arash Behboodi, Fabio Valerio Massoli

NeurIPS ReALML Workshop, 2023

paper · bibtex

We use reinforcement learning to learn active learning policies that guide local-surrogate-based inverse problem optimisation.

Tim Bakker, Fabio Valerio Massoli, Thomas Hehn, Tribhuvanesh Orekondy, Arash Behboodi

NeurIPS Deep Inverse Workshop, 2023

paper · bibtex

Reinforcement learning policies to guide surrogate-based inverse problem optimisation.

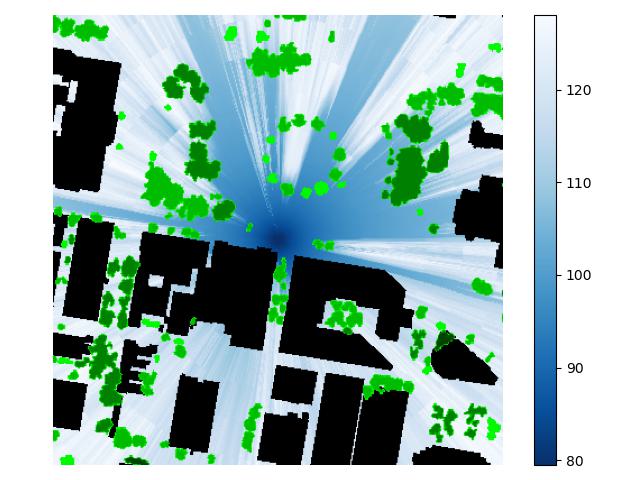

Transformer-Based Neural Surrogate for Link-Level Path Loss Prediction from Variable-Sized Maps

Thomas Hehn, Tribhuvanesh Orekondy, Ori Shental, Arash Behboodi, Juan Bucheli, Akash Doshi, June Namgoong, Taesang Yoo, Ashwin Sampath, Joseph Soriaga

GLOBECOM, 2023

paper · bibtex

Transformer-based neural surrogate to model mmWave path losses in dense urban scenarios.

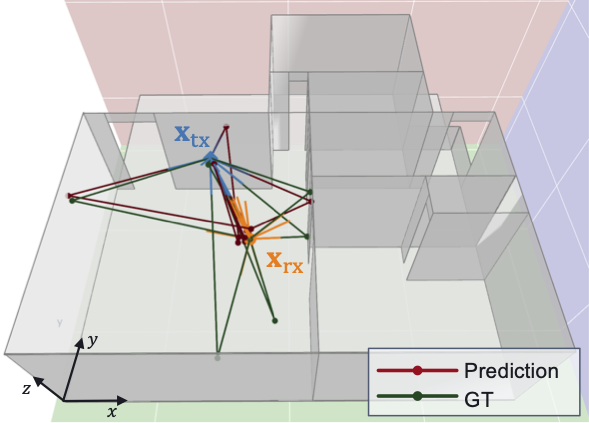

WiNeRT: Towards Neural Ray Tracing for Wireless Channel Modelling and Differentiable Simulations

Tribhuvanesh Orekondy, Prateek Kumar, Shreya Kadambi, Hao Ye, Joseph Soriaga, Arash Behboodi

ICLR, 2023

paper · talk · dataset · bibtex

NeRF-like neural surrogate to model differentiable wireless electromagnetic propagation effects in indoor environments.

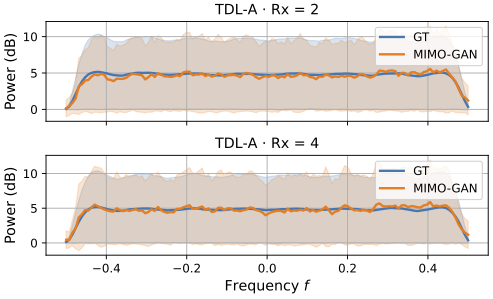

Tribhuvanesh Orekondy, Arash Behboodi, Joseph Soriaga

ICC, 2022

paper · bibtex

GAN-based surrogate simulator to learn distributions of complex-valued channel impulse response waveforms.

Hui-Po Wang, Tribhuvanesh Orekondy, Mario Fritz

CVPR (Fair, Trusted, and Data Efficient Computer Vision workshop), 2021

paper · bibtex

An image obfuscation network that removes private attribute information (e.g., by inverting the attribute or maximizing uncertainty about it) while retaining image fidelity.

Differential Privacy Defenses and Sampling Attacks for Membership Inference

Shadi Rahimian, Tribhuvanesh Orekondy, Mario Fritz

AISec, 2021

paper · bibtex

Differential Privacy approaches to defend against membership inference attacks.

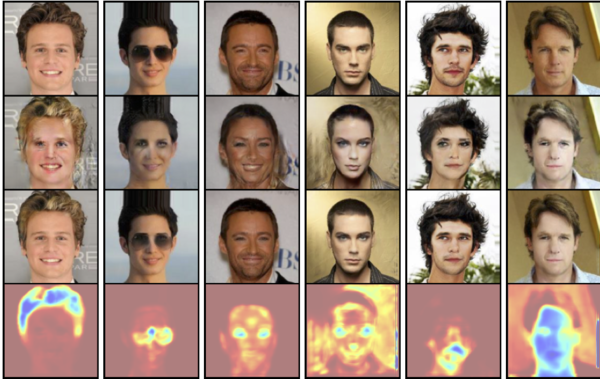

Dingfan Chen, Tribhuvanesh Orekondy, Mario Fritz

NeurIPS, 2020

paper · bibtex

A novel GAN to allow releasing sanitized forms of data with rigorous differential privacy guarantees.

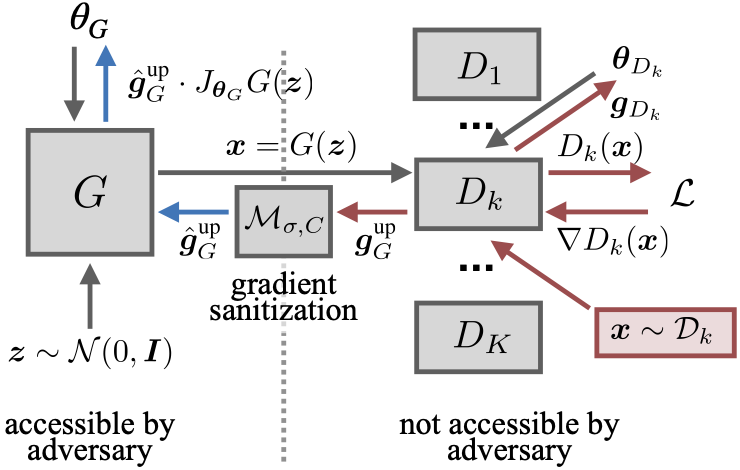

Tribhuvanesh Orekondy, Bernt Schiele, Mario Fritz

ICLR, 2020

paper · project page · bibtex

An optimization-based defense against model stealing attacks that perturbs the model's predictions to poison the gradient signal an attacker uses to train a copy.

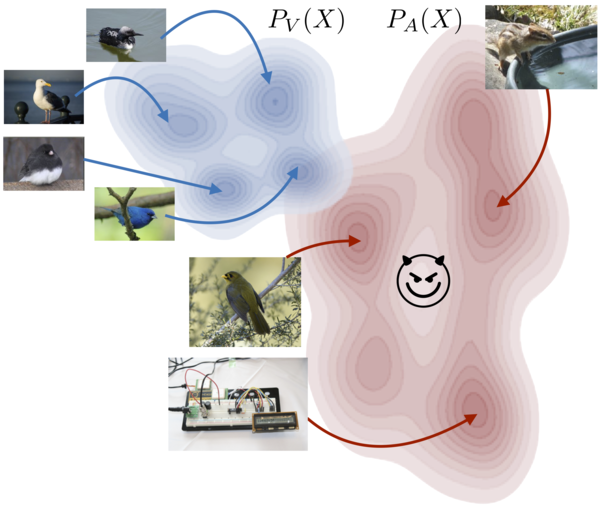

Tribhuvanesh Orekondy, Bernt Schiele, Mario Fritz

CVPR, 2019

paper · poster · extended abstract (CV-COPS@CVPR) · project page · bibtex

Vision models encode meaningful information in their predictions even on out-of-distribution natural images. We exploit this property to steal the functionality of complex vision models.

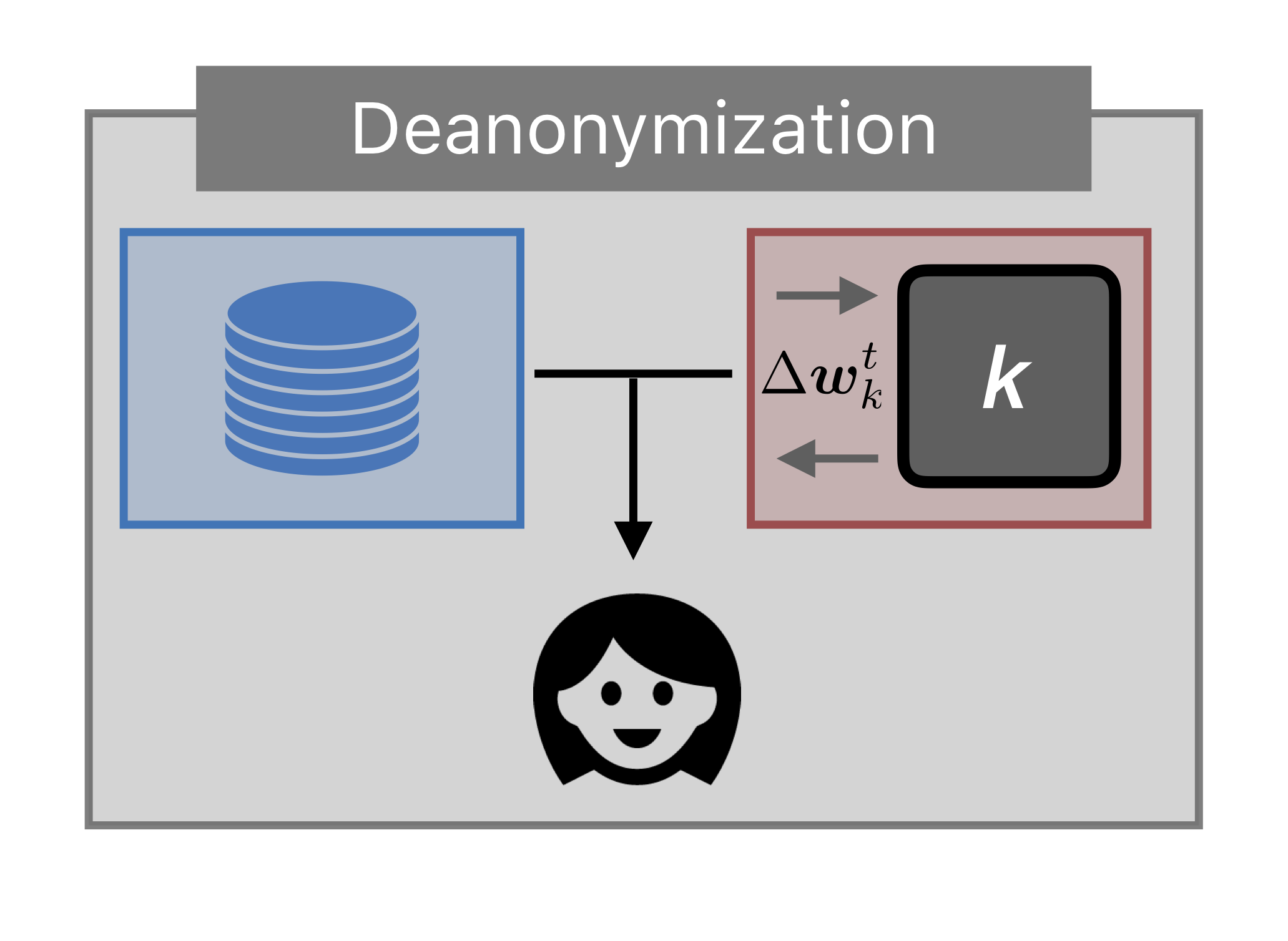

Gradient-Leaks: Understanding and Controlling Deanonymization in Federated Learning

Tribhuvanesh Orekondy, Seong Joon Oh, Yang Zhang, Bernt Schiele, Mario Fritz

FL NeurIPS, 2019

paper · poster · talk · bibtex

Parameter updates (gradients) shared in federated learning encode user-specific statistical biases of participating devices, raising deanonymization concerns.

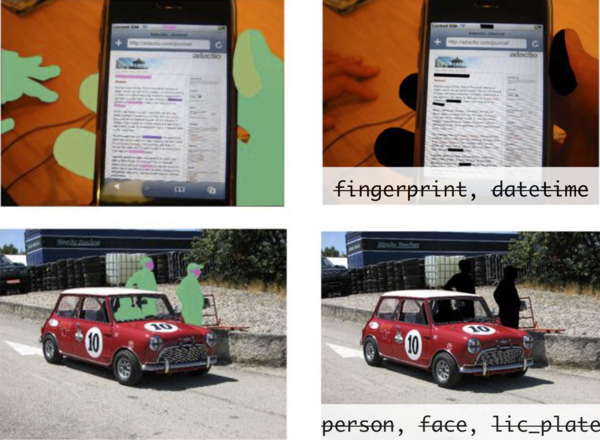

Connecting Pixels to Privacy and Utility: Automatic Redaction of Private Information in Images

Tribhuvanesh Orekondy, Mario Fritz, Bernt Schiele

CVPR, 2018 (Spotlight)

paper · poster · project page · video · bibtex

Automatic method to identify and redact a broad range of private information spanning multiple modalities in visual content.

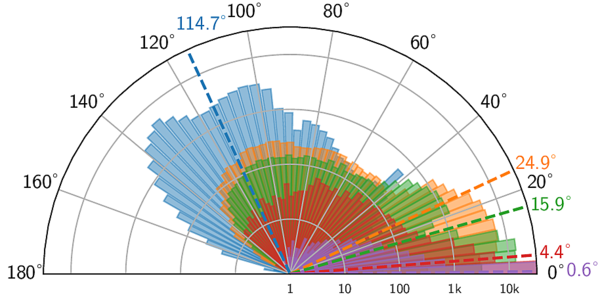

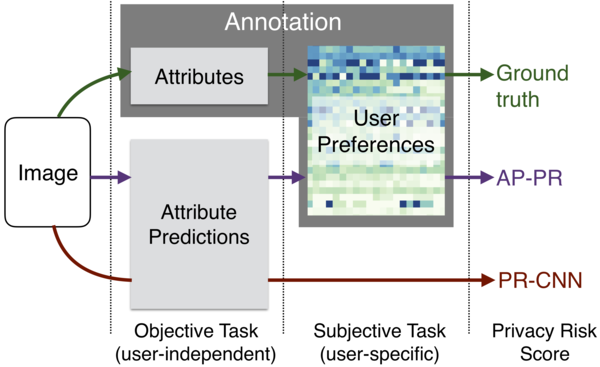

Towards a Visual Privacy Advisor: Understanding and Predicting Privacy Risks in Images

Tribhuvanesh Orekondy, Bernt Schiele, Mario Fritz

ICCV, 2017

paper · poster · extended abstract (VSM@ICCV) · project page · bibtex

An approach to understand and predict a wide spectrum of privacy risks in images.

HADES: Hierarchical Approximate Decoding for Structured Prediction

Tribhuvanesh Orekondy (under the supervision of Martin Jaggi, Aurelien Lucchi, and Thomas Hofmann)

Master's Thesis, 2016

paper · project page · bibtex

A fast structured-output learning algorithm that calls decoding oracles with varying degrees of approximation.

Academic Activities

- Reviewing: CVPR '19, CV-COPS '19, TPAMI '19, ICCV '20, AAAI '20, CVPR '20, ECCV '20, NeurIPS '20, IJCV '20, WACV '21, CVPR '21, ICLR '21 (Outstanding reviewer award)

- Teaching Assistant: Machine Learning in Cyber Security, 2018, 2019

- Thesis co-supervision: Shadi Rahimian (MSc, Saarland University), Jonas Klesen (BSc, Saarland University)